Enable Live Streaming Mode

With the THETA off, press and hold the mode button. Keep pressing mode and then press power. The camera will go into live streaming mode.

With the camera in live streaming mode, the word Live will appear in blue.

Connect Camera to Computer

Plug the camera into your computer with a micro USB cable. It will appear as a normal webcam. The camera will be called RICOH THETA S.

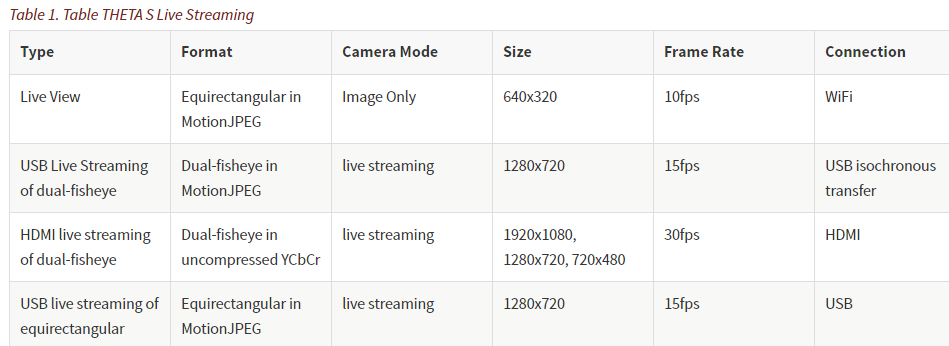

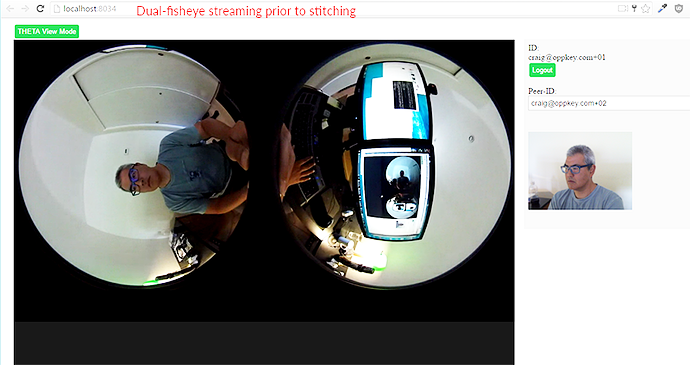

The THETA is now streaming in dual-fisheye mode. The stream is 1280x720 at 15fps. The data is in MotionJPEG format.

Install Live Streaming Software

To stream the video to YouTube, install the official RICOH Live-streaming app and OBS.

Note

Many software packages can be used to stream to YouTube. Refer to YouTube Live Verified Devices and Software for more information.

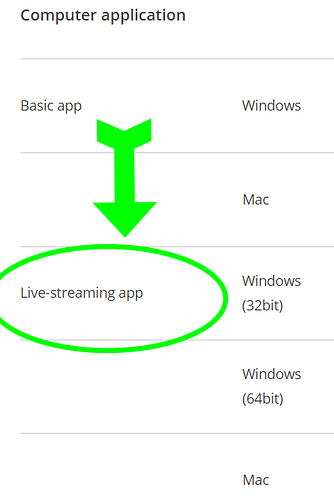

Download and Install RICOH Live-Streaming App

- Select Windows 32bit, Windows 64bit, or Mac
- Turn your THETA off
- Unplug THETA from your computer
- Install software

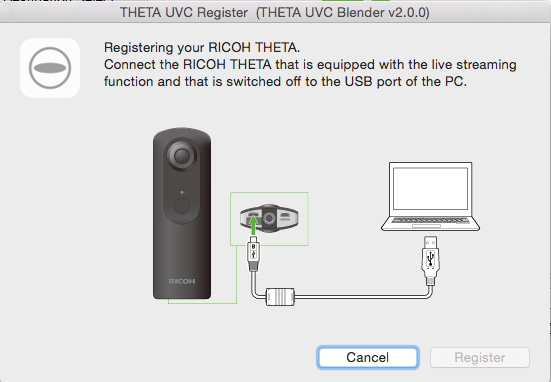

With the THETA turned off, the software will prompt you to reconnect the THETA to register your camera.

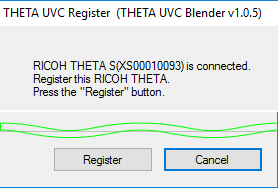

After you connect your THETA, a Register button will appear.

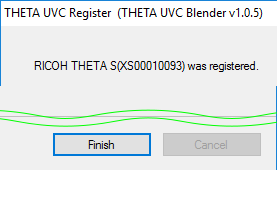

Complete the registration.

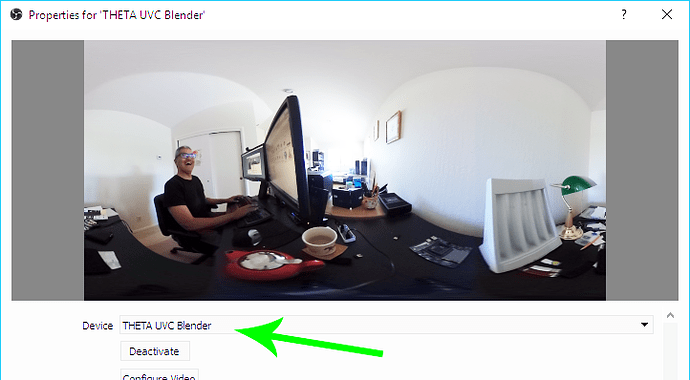

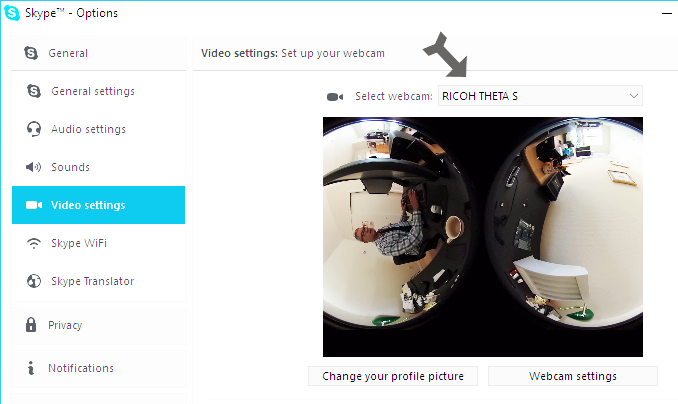

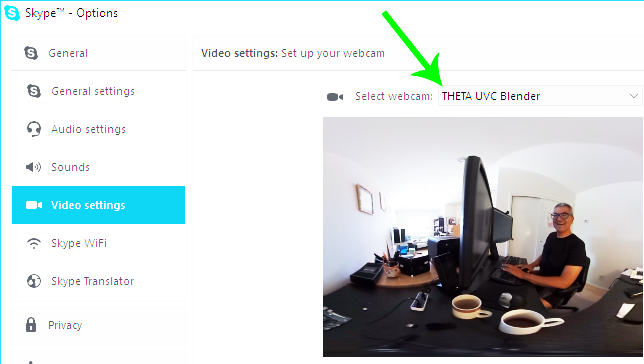

Test the THETA UVC Blender driver with any software that works with a webcam. In the example below, I am using Skype.

Caution

Make sure you select THETA UVC Blender and not RICOH THETA S.

Note

In Skype, the video does not have 360° navigation (as of Oct 2016) and it will look like a distorted rectangle. Use Skype only to test the driver, not to stream 360° video.

Download and Install OBS

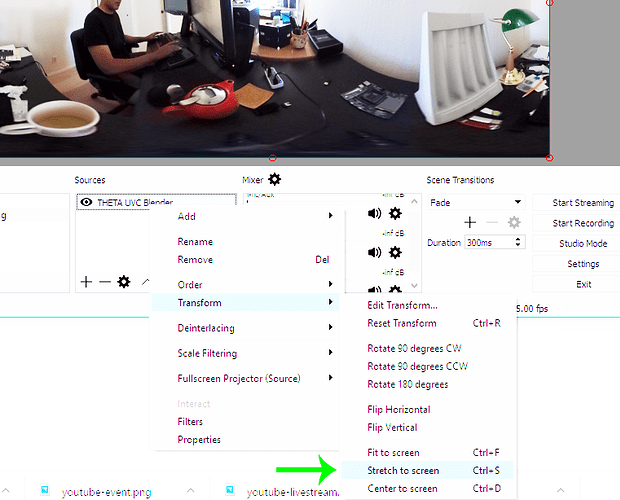

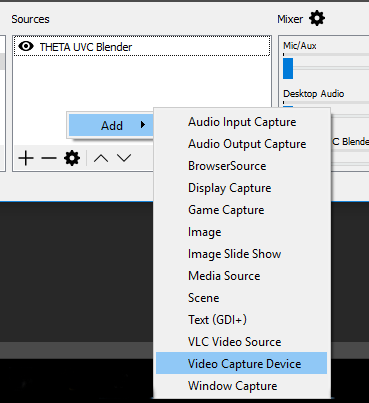

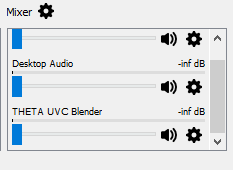

Create a new Scene. Any name is fine. Under Sources, click on the plus sign (or right click to open the pop-up menu) and add a video capture device. Any name is fine for the source; this example calls it THETA UVC Blender.

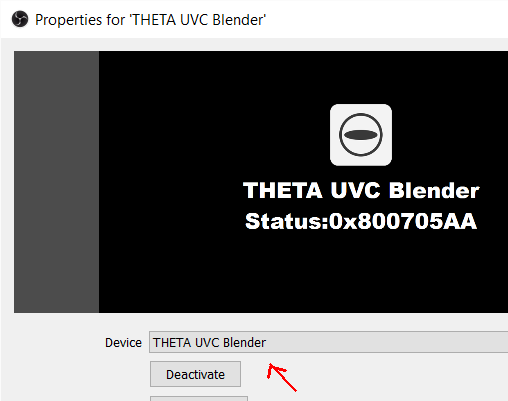

Select THETA UVC Blender as the Device. Verify that the video stream is in equirectangular format.

Tip

If you see a black screen that says Status:0x800705AA, try toggling the device between your two webcams. If you still see the error, disconnect all other webcams or disable the webcam on your laptop and then reboot your computer. The error indicates that a connection is not established. See the Troubleshooting section below.

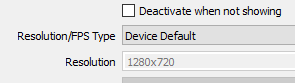

Leave the Resolution/FPS Type as Device Default.

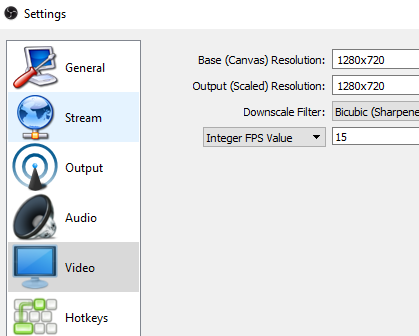

Under Settings → Video, set the resolution to 1280x720.

Note

As of November 2016, the maximum resolution for UVC Blender is 1080p. Please check the maximum resolution and adjust your settings if needed.

Select Stretch to screen.

Create a YouTube Live 360° Event

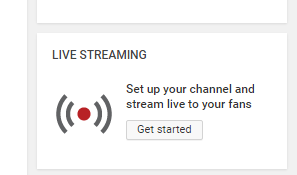

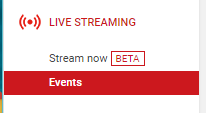

Log into YouTube. Click on the Upload button. Click the Get started button on live streaming.

Select Events.

Warning

Make sure you select Events. You will not get a 360° stream with Stream now.

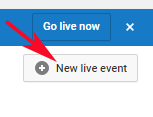

On the right side of the screen, select New live event.

Add a title.

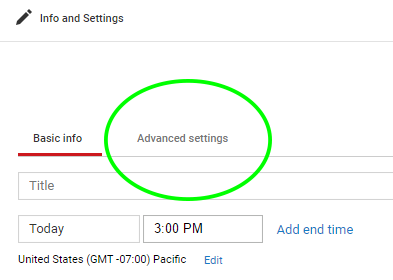

Select Advanced Settings.

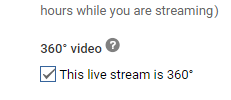

Select This live stream is 360.

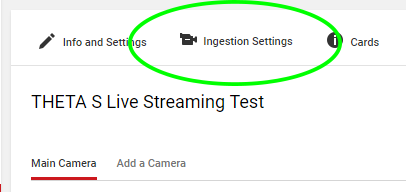

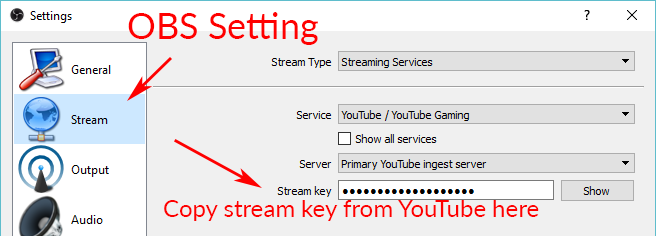

Grab stream name from Ingestion Settings

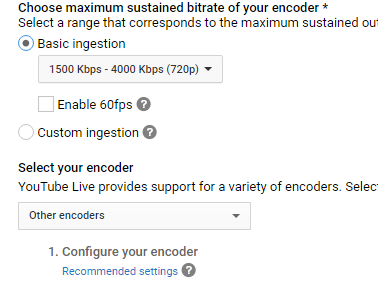

Once you click on Basic ingestion, information on your encoder will open up.

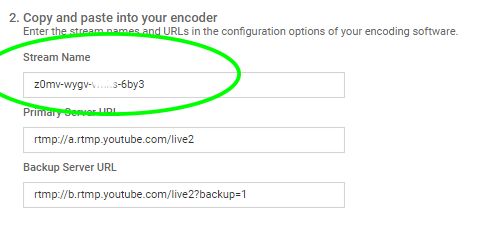

Copy the stream name. You will need this for OBS, where it is called Stream key.

Open OBS and go to Settings → Stream. Paste the YouTube stream name into the box called Stream key.

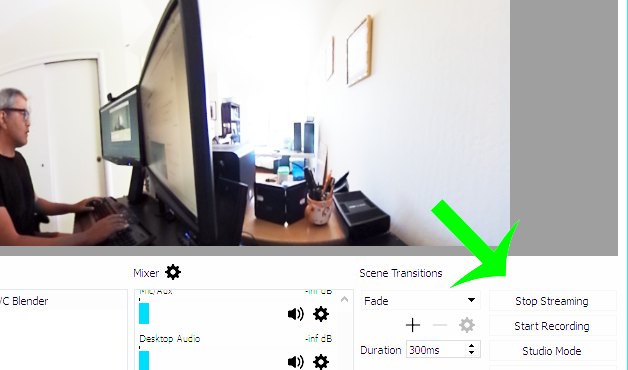

On the main OBS control panel, press Start Streaming on the right-hand side.

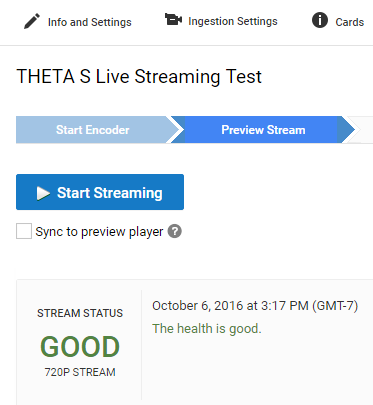

On YouTube, go to the Live Control Room and click Preview Stream.

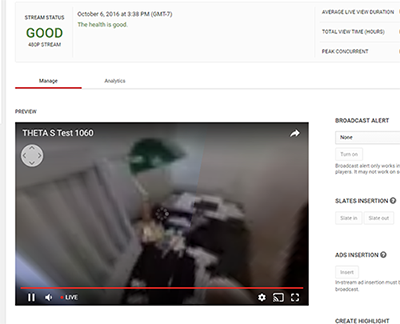

You can preview the stream if you have good bandwidth. I have limited upstream bandwidth in my office. I reduced the ingestion bandwidth, making my resolution lower.

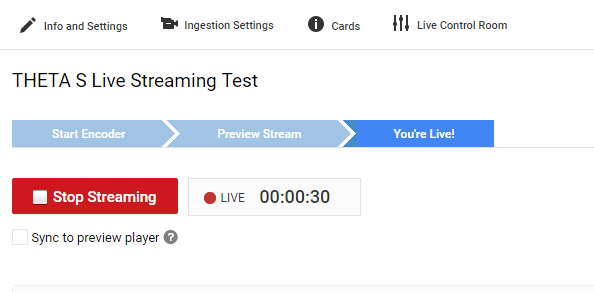

When you’re ready, start the stream.

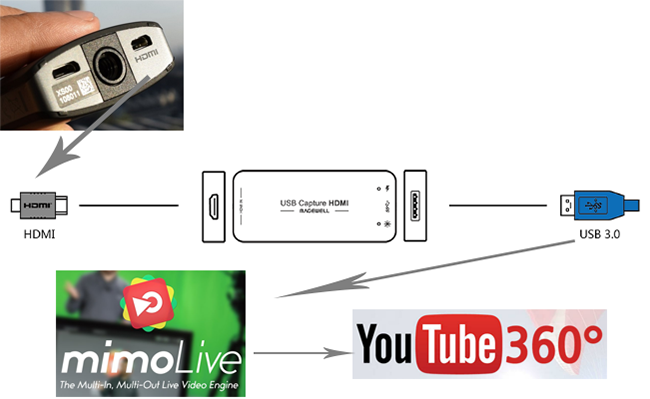

Using HDMI

Using USB output for live streaming, you will get a maximum resolution of 720p. If you save your video files to your camera, the resolution will be 1920x1080. If you save still images as timelapse, you can get 5376x2688, which will be displayed as 4K on YouTube.

The THETA S has an HDMI port that can output 1920x1080 at 30fps. To use this signal, you need an HDMI capture device such as the Blackmagic Design UltraStudio for Thunderbolt.

Once you get the video stream onto your computer, it will be in dual-fisheye. To get this into equirectangular, you will need to use a third-party product such as Streambox Cloud Encoder or mimoLive.
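To give a feel for what the dual-fisheye to equirectangular conversion involves, here is a minimal sketch of the geometry for a single viewing direction. It assumes two ideal back-to-back lenses that follow the equidistant fisheye model with exactly 180° of coverage each. The real THETA lenses cover more than that and need calibration and blending at the seam, which this sketch ignores. The function name `equirect_to_fisheye` and its coordinate conventions are made up for illustration.

```python
import math

def equirect_to_fisheye(lon, lat, fov_deg=180.0):
    """Map a viewing direction to (lens, u, v) in an idealized dual-fisheye frame.

    lon and lat are in radians (lon 0 = straight ahead, lat 0 = horizon).
    lens is 0 for the front lens and 1 for the rear lens. u and v are
    normalized coordinates inside that lens's image; (0.5, 0.5) is the center.
    """
    # Unit direction vector; +z is the front lens's optical axis.
    x = math.cos(lat) * math.sin(lon)
    y = math.sin(lat)
    z = math.cos(lat) * math.cos(lon)

    lens = 0
    if z < 0:
        # The direction is behind the camera: use the rear lens, which looks
        # along -z, so left and right are swapped relative to the front lens.
        lens, x, z = 1, -x, -z

    theta = math.acos(max(-1.0, min(1.0, z)))  # angle away from the optical axis
    r = theta / math.radians(fov_deg / 2.0)    # equidistant model: radius is linear in angle
    phi = math.atan2(y, x)                     # angle around the optical axis

    u = 0.5 + 0.5 * r * math.cos(phi)
    v = 0.5 - 0.5 * r * math.sin(phi)          # image rows grow downward
    return lens, u, v
```

A full converter would evaluate this mapping once for every pixel of the equirectangular output, cache the result as a lookup table, and then sample each incoming dual-fisheye frame through that table.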

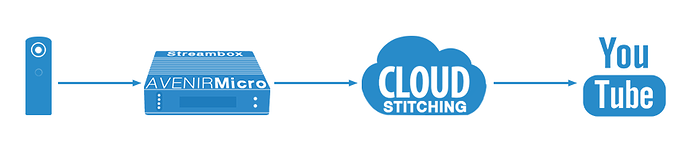

Streambox

This is the workflow.

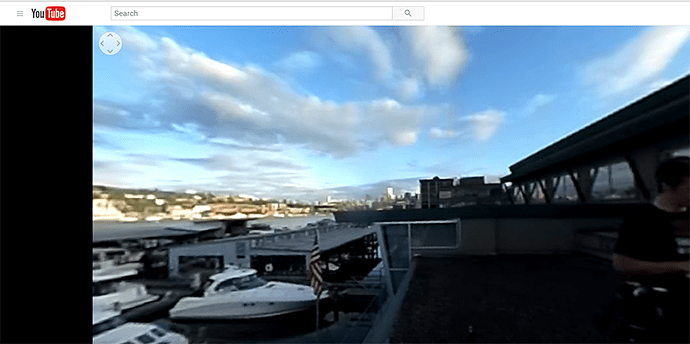

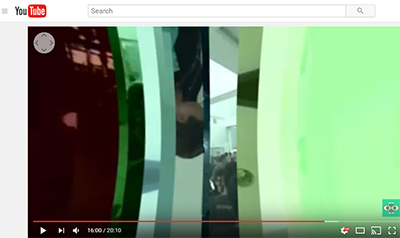

This is a sample of the live stream using a THETA.

This is the equipment and service list used:

- Streamed live using Streambox Cloud Encoder
- RICOH THETA S Camera
- Blackmagic UltraStudio Mini Recorder
- MacBook Pro with USB Modems
- Streambox Cloud

mimoLive

Boinx Software offers mimoLive.

They have a good video that provides an overview of their service specifically for the THETA S.

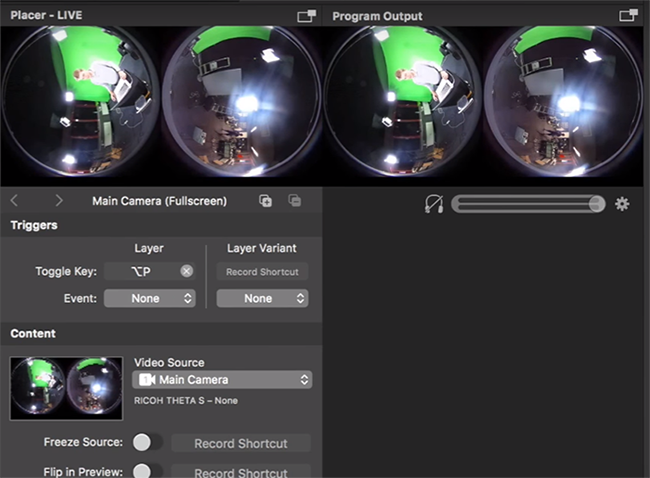

mimoLive can accept a USB or HDMI stream in dual-fisheye.

In order to use the HDMI output from the THETA, you will need an HDMI video grabber. Boinx Software recommends the Blackmagic Design UltraStudio Mini Recorder for Thunderbolt or the Magewell USB Capture HDMI adapter for USB 3.

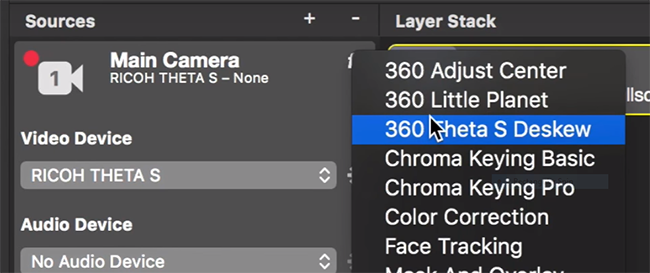

mimoLive can take the THETA S dual-fisheye video stream source and apply a filter to convert it to equirectangular for streaming to places like YouTube Live 360 events.

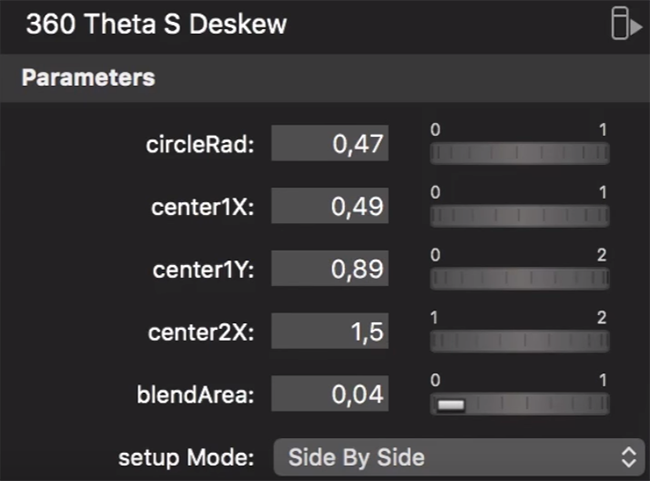

mimoLive provides sliders to adjust the sphere stitching. You’ll only be able to get a good enough stitch. The edges of the spheres will not match perfectly.

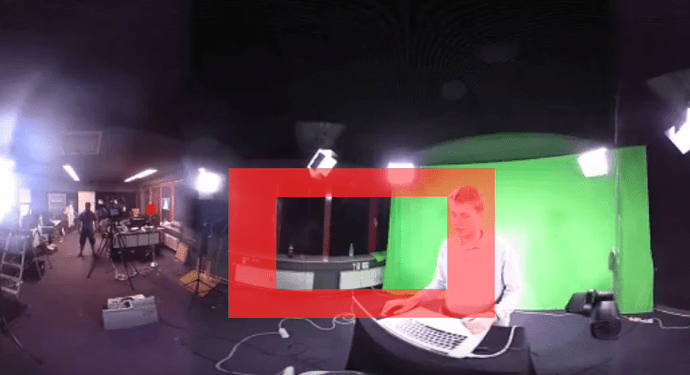

This is an example of the 360 live stream. The quality of the stitch is good.

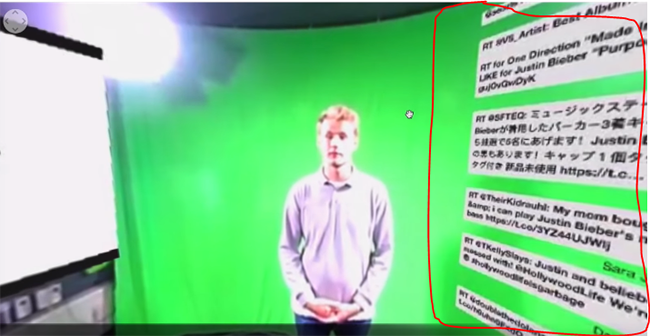

Even if you are using USB output, you still may want to use mimoLive instead of the free RICOH THETA UVC Blender app to take advantage of mimoLive features to add text, Twitter, and slides into the YouTube live streaming event.

You can also center your video stream.

Other

Videostream360 offers a service to use THETA at 1920x1080 with HDMI. They even sell the THETA on their site.

If you have a solution for HDMI 360° streaming and you’ve verified that it works with the THETA, please join the THETA Ecosystem and post information about it.

Tethered Streaming with Unity

Please refer to this separate article on using Unity with a tethered THETA.

Untethered WiFi Streaming

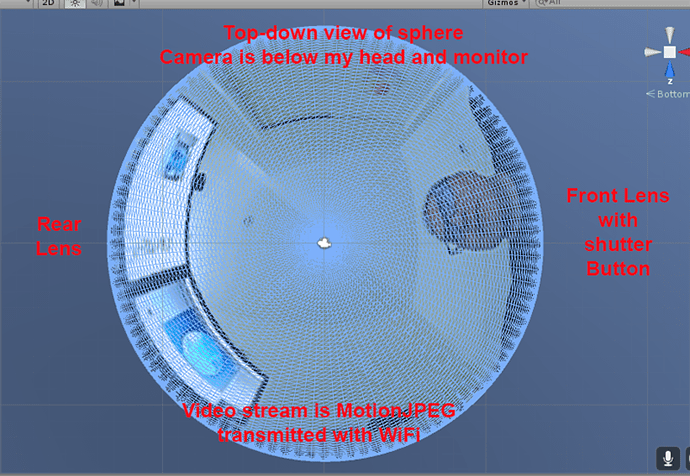

Streaming from the THETA using WiFi is primarily of interest to developers and hobbyists.

Using Unity

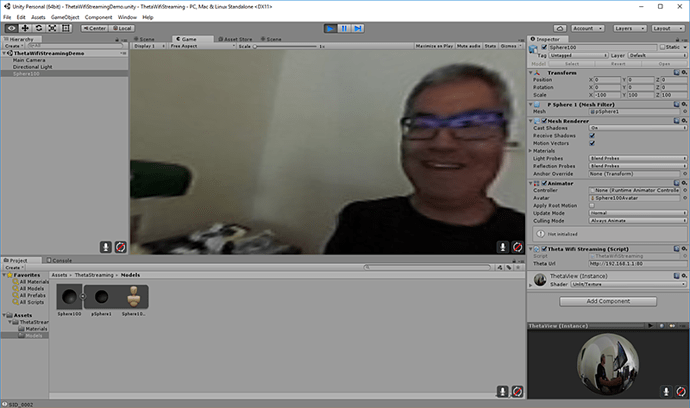

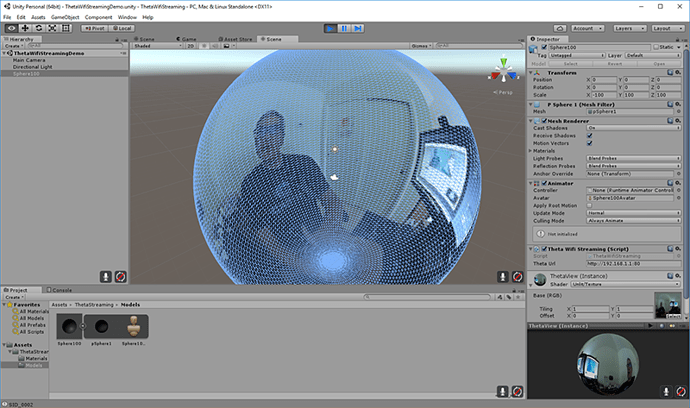

The THETA can live stream a 640x320 MotionJPEG at 10fps over WiFi. This is intended to preview a picture prior to taking the picture. It’s not intended for headset navigation. The community has built some solutions to stream this low-res, low fps video to mobile phones, primarily using Unity.

This is a short Vine video of a demo.

Most developers have challenges processing the MotionJPEG stream.

Fortunately, sample code of a THETA S WiFi streaming demo with Unity was developed by community member Makoto Ito. I've translated the README to his code as well as a related blog written by @noshipu, CEO of ViRD, Inc. I thank him for his contribution.

About the RICOH THETA API

In order to use WiFi live streaming, you must use the _getLivePreview API. [Official Reference](https://developers.theta360.com/en/docs/v2.0/api_reference/commands/camera._get_live_preview.html)

NOTE from Craig: This was replaced by getLivePreview in version 2.1 of the API. This blog by Noshipu-san refers to the 2.0 API, which is still supported by the THETA S. Be aware of the differences in your code.
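As a rough illustration of the difference between the two API versions, the sketch below builds the POST request for either command. It rests on several assumptions that are not stated in this article: that the camera is reachable at 192.168.1.1, that commands are posted to `/osc/commands/execute`, and that the 2.0 command needs a `sessionId` obtained earlier from `camera.startSession` while the 2.1 command takes no parameters. `build_live_preview_request` is a hypothetical helper, not part of any RICOH SDK. Check these details against the official reference before relying on them.

```python
import json

def build_live_preview_request(api_version="2.0", session_id=None,
                               host="192.168.1.1"):
    """Return (url, headers, body) for a live preview command.

    host, the endpoint path, and the parameter layout are assumptions;
    verify them against the official API reference.
    """
    url = "http://{}/osc/commands/execute".format(host)
    headers = {"Content-Type": "application/json; charset=utf-8"}

    if api_version == "2.0":
        if session_id is None:
            raise ValueError("API 2.0 is assumed to need a sessionId")
        payload = {
            "name": "camera._getLivePreview",        # 2.0 name, leading underscore
            "parameters": {"sessionId": session_id},
        }
    else:
        payload = {"name": "camera.getLivePreview"}   # 2.1 name, no session

    body = json.dumps(payload).encode("utf-8")
    return url, headers, body
```

The returned body is what would go in place of the "Put the JSON data here" placeholder in the C# code further down.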

Unlike the other APIs, _getLivePreview returns its data as a stream that keeps going. You probably cannot use the WWW class for it, because WWW waits until the request is complete.

NOTE from Craig: This is the major problem developers have when working with getLivePreview. As the data is a stream, you can't wait for the data to end before running your next command. This is different from downloading and displaying an image or video file, where you know when the transfer is complete.
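The point about not waiting for the end of the data can be sketched in a few lines of Python. This is only an illustration: `iter_chunks` is a hypothetical helper, and an in-memory `io.BytesIO` object stands in for the camera's HTTP response so the example runs without a camera.

```python
import io

def iter_chunks(stream, chunk_size=1024):
    """Yield data from a file-like object as it arrives.

    Each chunk is handed to the caller immediately, so processing can start
    long before the stream ends (a live preview stream may never end).
    """
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:   # an empty read means the other side closed the stream
            break
        yield chunk

# Stand-in for the camera's response: 2500 bytes of dummy data.
fake_response = io.BytesIO(b"x" * 2500)
sizes = [len(chunk) for chunk in iter_chunks(fake_response)]
print(sizes)  # [1024, 1024, 452]
```

With a real response object the loop body would pass each chunk to a frame parser instead of just measuring its length.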

Processing Flow

Create an HttpWebRequest and configure it as a POST request

    // Requires: using System.IO; using System.Net; using System.Text;
    string url = "Enter HTTP path of THETA here";
    var request = (HttpWebRequest) WebRequest.Create (url);
    HttpWebResponse response = null;
    request.Method = "POST";
    request.Timeout = (int) (30 * 10000f); // 300000 ms (5 minutes), so the request does not time out early
    request.ContentType = "application/json; charset=utf-8";
    // Encode the body as UTF-8 to match the charset declared above
    byte [] postBytes = Encoding.UTF8.GetBytes ("Put the JSON data here");
    request.ContentLength = postBytes.Length;

Create a BinaryReader to read the response data (you get the bytes one by one)

    // Send the POST data
    Stream reqStream = request.GetRequestStream ();
    reqStream.Write (postBytes, 0, postBytes.Length);
    reqStream.Close ();
    // Open the response stream; the camera keeps sending frames on it
    Stream stream = request.GetResponse ().GetResponseStream ();
    BinaryReader reader = new BinaryReader (new BufferedStream (stream), new System.Text.ASCIIEncoding ());

Find the start and end bytes of one frame of the MotionJPEG and cut out that frame

Check each byte against the marker values that separate the frames of the MotionJPEG.

    ...(http)
    0xFF 0xD8 --|
    [jpeg data] |--1 frame of MotionJPEG
    0xFF 0xD9 --|
    ...(http)
    0xFF 0xD8 --|
    [jpeg data] |--1 frame of MotionJPEG
    0xFF 0xD9 --|
    ...(http)

Please refer to the StackOverflow answer to "How to Parse MJPEG HTTP stream from IP camera?"

Each frame starts with the 2 bytes 0xFF, 0xD8 and ends with the 2 bytes 0xFF, 0xD9.

The code is shown below.

    // Requires: using System.Collections.Generic;
    List<byte> imageBytes = new List<byte> ();
    bool isLoadStart = false; // True once the start marker of a frame has been seen
    byte oldByte = 0; // The previous byte read from the stream
    while (true) {
        byte byteData = reader.ReadByte ();
        if (!isLoadStart) {
            // A frame starts only when 0xFF is immediately followed by 0xD8
            if (oldByte == 0xFF && byteData == 0xD8) {
                imageBytes.Add (0xFF);
                imageBytes.Add (0xD8);
                isLoadStart = true;
            }
        } else {
            // Collect the bytes of the current frame
            imageBytes.Add (byteData);
            // 0xFF followed by 0xD9 marks the end of the frame
            if (oldByte == 0xFF && byteData == 0xD9) {
                // imageBytes now holds one complete JPEG image.
                // Generate the texture from imageBytes here, then empty the list
                // so the loop goes back to looking for the start of the next frame.
                imageBytes.Clear ();
                isLoadStart = false;
            }
        }
        oldByte = byteData;
    }

Texture Generation Separated by Byte

Load the bytes of the completed frame into the texture.

    mainTexture.LoadImage (imageBytes.ToArray ());

Portion of Python code taken from the StackOverflow answer, adapted for Python 3.

    import cv2
    import urllib.request
    import numpy as np

    stream = urllib.request.urlopen('http://localhost:8080/frame.mjpg')
    buf = b''
    while True:
        buf += stream.read(1024)
        a = buf.find(b'\xff\xd8')  # JPEG start marker
        b = buf.find(b'\xff\xd9')  # JPEG end marker
        if a != -1 and b != -1:
            jpg = buf[a:b+2]
            buf = buf[b+2:]
            i = cv2.imdecode(np.frombuffer(jpg, dtype=np.uint8), cv2.IMREAD_COLOR)
            cv2.imshow('i', i)
            if cv2.waitKey(1) == 27:  # Esc key
                exit(0)

MJPEG over HTTP is multipart/x-mixed-replace with boundary frame info, and the JPEG data is just sent in binary. So you don't really need to care about the HTTP protocol headers. All JPEG frames start with the marker 0xff 0xd8 and end with 0xff 0xd9. So the code above extracts such frames from the HTTP stream and decodes them one by one, as shown below.

    ...(http)
    0xff 0xd8 --|
    [jpeg data] |--this part is extracted and decoded
    0xff 0xd9 --|
    ...(http)
    0xff 0xd8 --|
    [jpeg data] |--this part is extracted and decoded
    0xff 0xd9 --|
    ...(http)
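The marker-based extraction described above can also be written as a small generator that needs no camera or OpenCV to try out. This is a sketch, not the code used by any of the projects mentioned here: `iter_jpeg_frames` is a hypothetical helper, and the sample chunks are dummy bytes, not real JPEG data. It also assumes the frames carry no embedded thumbnails, which would contain their own start and end markers.

```python
SOI = b"\xff\xd8"  # JPEG start-of-image marker
EOI = b"\xff\xd9"  # JPEG end-of-image marker

def iter_jpeg_frames(chunks):
    """Yield complete JPEG frames found in an iterable of byte chunks."""
    buf = b""
    for chunk in chunks:
        buf += chunk
        while True:
            start = buf.find(SOI)
            if start == -1:
                # No frame start yet. Keep the last byte in case the
                # marker is split across two chunks.
                buf = buf[-1:]
                break
            end = buf.find(EOI, start + 2)
            if end == -1:
                # Frame is incomplete. Drop anything before its start
                # (HTTP boundary text) and wait for more data.
                buf = buf[start:]
                break
            yield buf[start:end + 2]
            buf = buf[end + 2:]

# Dummy stream: two "frames" split awkwardly across three chunks.
chunks = [
    b"--boundary\r\n\xff",
    b"\xd8AAA\xff\xd9--boundary\r\n\xff\xd8BB",
    b"B\xff\xd9tail",
]
frames = list(iter_jpeg_frames(chunks))
print(len(frames))  # 2
```

Searching for the end marker only after the start marker avoids cutting a frame at a stray end marker left over from a previous partial frame.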

Testing WiFi Streaming

You can test out WiFi Streaming without having to program. Download and install Unity Personal Edition. It's free.

Get Makoto Ito’s code for ThetaWifiStreaming.

Press Play.

Using a Raspberry Pi

A Raspberry Pi can take the video live stream from the THETA using USB and transmit the stream to another device using WiFi. This is intended for software developers to use as a starting point.

There is sample code available for both the transmission of the live stream and the conversion of the live stream into a navigable 360 video. Both the browser and the server applications are written in JavaScript. The server application uses node.

The sample code uses JavaScript to convert the dual-fisheye video stream into a navigable 360° video. Transmission uses WebRTC.

![stream conversion done in browser](/uploads/default/original/1X/606077a81887bf389f9f012efd49b68ee19432ee.png)

FAQ

Q: What’s the Resolution and FPS?

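A: Updated Oct 2016. As described in the sections above, USB live streaming is 1280x720 at 15fps, HDMI output is 1920x1080 at 30fps, and the WiFi live preview is 640x320 at 10fps.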

Q: Can I stream from a drone to a headset?

A: Only with expensive equipment. This is not a good use of the THETA for recreational hobbyists. Refer to this article for more information.

Q: Does the THETA have auto-stabilization?

A: No. You’ll need to use a third-party gimbal.

Q: Is anyone using the THETA 360° stream for object recognition?

A: Yes. Most people use the raw video from the 2 fisheye spheres and do not convert it to equirectangular video. They just extract a portion of the sphere and perform the image recognition or measurement on that section. The HDMI stream has higher resolution, so most people use that, extract a frame, and then perform the calculation. Known applications include facial recognition, audience emotion recognition, and autonomous vehicle operation. As just one example, the winner of the RICOH prize at the 2016 DeveloperWeek Hackathon used the Microsoft Emotion API on the dual-fisheye spheres.
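As a small illustration of extracting a portion of the raw frame, the sketch below computes the crop boxes for the two fisheye images and crops a frame held as a list of pixel rows. It assumes the two images sit side by side and each fills half the frame width, as in the 1280x720 USB stream. The helper names `split_dual_fisheye` and `crop` are made up for this example.

```python
def split_dual_fisheye(width=1280, height=720):
    """Return (left, top, right, bottom) crop boxes for the two fisheye images.

    Assumes the two images are side by side, each half the frame width.
    """
    half = width // 2
    first = (0, 0, half, height)
    second = (half, 0, width, height)
    return first, second

def crop(rows, box):
    """Crop a frame stored as a list of pixel rows to the given box."""
    left, top, right, bottom = box
    return [row[left:right] for row in rows[top:bottom]]

first, second = split_dual_fisheye()
print(first, second)  # (0, 0, 640, 720) (640, 0, 1280, 720)
```

Each cropped half can then be passed to a recognition library on its own, without any equirectangular conversion.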

Q: Is anyone working on panoramic sound?

A: Yes. There are many projects for 3D sound, including SOPA, an open source JavaScript library.

Q: How do I increase the sound quality?

A: Use an external microphone and add it to your mixer. Set the THETA’s input to zero using your mixer. If you’re using OBS for the stream, plug your microphones into your computer and then add a new audio source from the main dashboard to your stream. There is no way to plug a microphone directly into the THETA.

Troubleshooting

Streaming to YouTube

Problem: Status:0x800705AA

- Verify your firmware is 01.42 or above
- Make sure the word Live is lit in blue LED lights on your camera
- Toggle between webcam and UVC Blender. If this still fails to resolve the problem, disable all other webcams and reboot
- Try a different USB cable. Plug it into the port on the back of your computer
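If you check the firmware version in a script, compare the parts of the version as numbers instead of comparing raw strings. The sketch below assumes the version is a dot-separated string such as "01.42" that you have already read from the camera or the RICOH app. Both helper names are hypothetical.

```python
def parse_firmware(version):
    """Turn a version string such as "01.42" into a tuple of ints."""
    return tuple(int(part) for part in version.strip().split("."))

def firmware_at_least(version, minimum="01.42"):
    """Return True if version is the same as or newer than minimum."""
    return parse_firmware(version) >= parse_firmware(minimum)

print(firmware_at_least("01.30"))  # False
print(firmware_at_least("01.42"))  # True
```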

Problem: Screen is black with nothing on it

Check the video resolution. Set it to 1280x720.

Problem: Video on YouTube is Equirectangular with No Navigation

If the stream is in equirectangular on OBS and it can’t be navigated on YouTube, check your YouTube configuration.

Heat

The unit below overheated 16 minutes into the shoot. It is using UVC Blender and a USB cable during an indoor shoot at Stanford during a crowded VR event.

If the THETA is overheating, point a standard household fan at it. The airflow may be enough to cool the outside of the THETA and help with the internal overheating.

People have reported success by sticking $6 Raspberry Pi heatsinks onto the body of the THETA or taping or attaching a small fan used for computer CPUs to the outside of the THETA.

- 6 piece Addicore heatsink for Raspberry Pi for $5.95

The enthusiast below created custom cases in plastic through a shop in Akihabara. He wanted to use metal, but the cost was too high.